Concerns are growing in educational institutions as students increasingly use AI tools to complete and submit assignments.

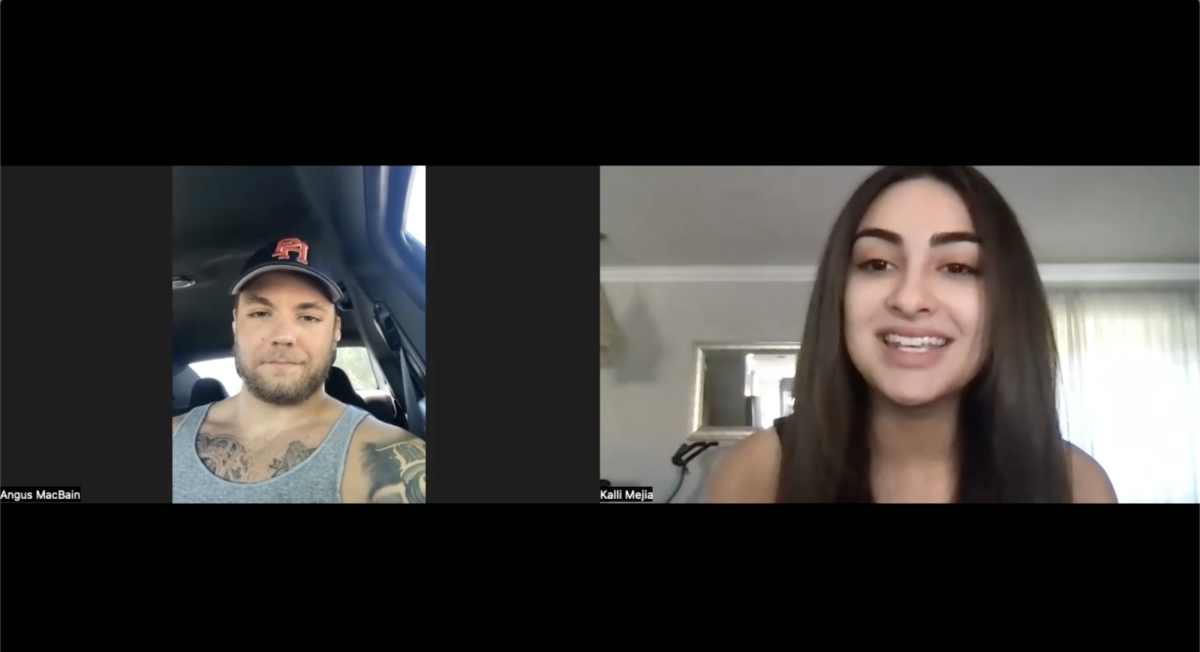

I saw this firsthand in a hybrid multimedia journalism course I took this semester at Diablo Valley College, where students were tasked with uploading self-taped videos analyzing American media outlets. Instead, several assignments from my classmates appeared to contain AI-generated videos that clearly lacked the personal touch expected from such a project.

The students’ assignments, which should have reflected analysis and critical thinking, stood out due to their generic tone and oddly structured responses, both telltale signs of AI-generated content. Yet for many in higher education today, this kind of “work” is becoming more commonplace and accepted.

“Why not use AI?” said Dr. Jose Lizárraga, a visiting researcher at UC Berkeley who is working on a project that explores online collaborative pedagogies and AI prejudices. “If that’s all you want from me—if you don’t want me to engage in critical thinking—then why would you blame a student for trying to be expedient in the use of AI?”

As more students turn to AI for convenience, educators nationwide are now being forced to confront deeper questions about ethics, boundaries and the role of AI in education.

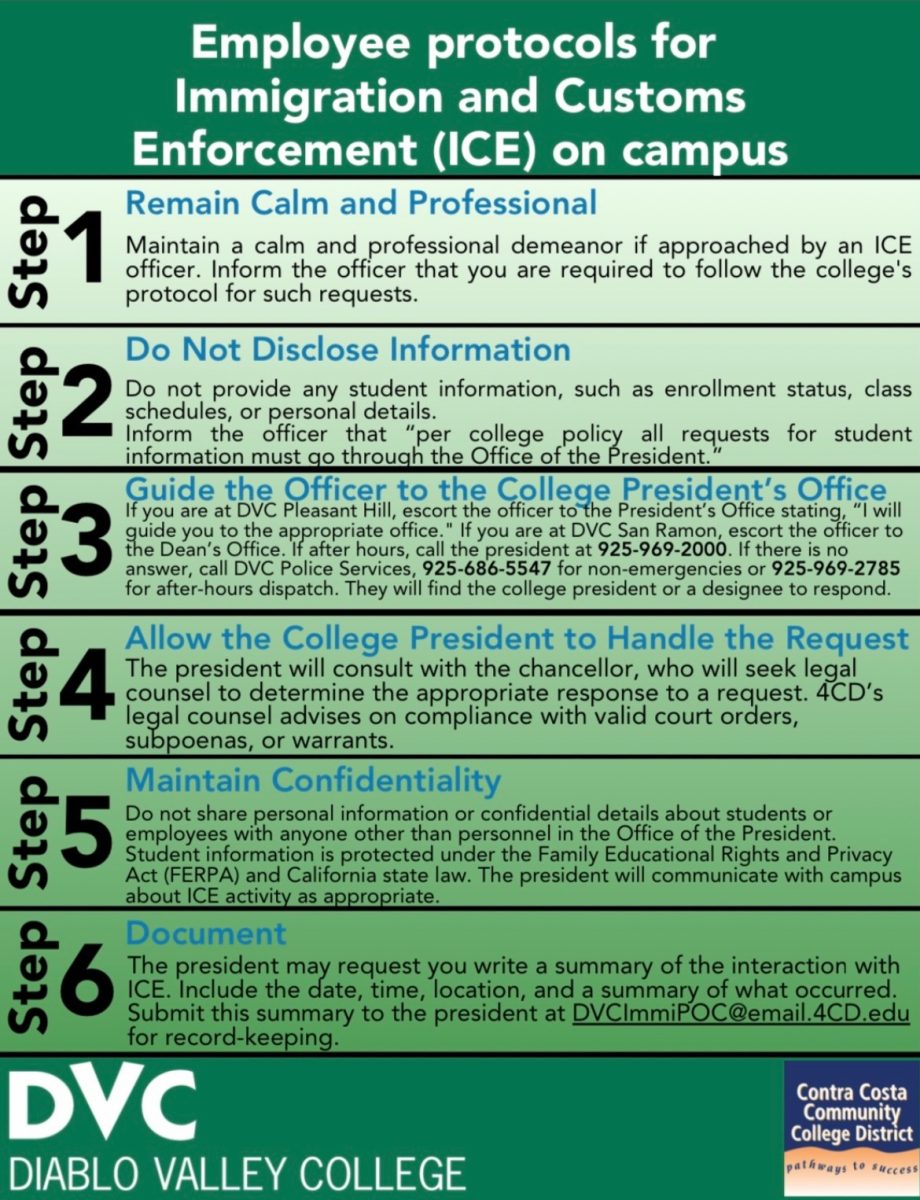

Here in the Contra Costa Community College District (4CD), faculty and administrators are actively working to better understand and teach responsible AI use.

At this year’s 4CD AI Summit, held on April 11 at DVC and titled “Culturally Responsive Teaching and Learning in the Age of AI,” educators gathered to discuss how artificial intelligence is reshaping the education system.

One attendee, DVC English professor Rayshell Clapper, who is also the school’s digital equity and distance educator coordinator, urged caution.

“The caution is understanding the ethics of AI—not only in how you use it, but also in how it’s using you.”

Clapper emphasized that AI’s growing presence in the classroom requires clear communication between faculty and students.

“One thing I did last semester is I drafted template language for AI policies in instructors’ syllabi,” Clapper explained. “The encouragement was to have a policy in your syllabus so that it’s transparent to students, so they know from class to class, professor to professor, what the policy is, so they have those boundaries.”

She acknowledged that drawing a firm line on the use of AI in the classroom remains complicated.

“I don’t think we can draw a line, unless an entire discipline, like every professor on this campus, says no AI. Then that line is drawn but…that’s not going to happen at DVC.”

Beyond classroom concerns, the infiltration of AI bots is also creating administrative headaches. As an open enrollment community college, DVC is required by law to admit any California resident possessing a high school diploma or equivalent, which has created an environment where distinguishing between real and “ghost” students is a growing challenge.

“Because of that… anybody can have access to higher education… but also so can ghost bots. And so then the burden comes onto the college to figure out who’s a ghost bot, and we do the best we can, but also, it can be really difficult,” Clapper said.

Also speaking at the AI event last month was engineering professor and interim dean of institutional research and planning at Contra Costa College, Chao Lui, who led a workshop on AI literacy.

“AI literacy [is] a skill I think that everybody should have,” said Lui. “The important part is about prompting—it’s like how do you instruct the machine to respond to your answer, and how do you ask questions.”

Lui said being able to interact and communicate effectively with AI systems is quickly becoming a foundational skill, comparable to being able to research and write academically.

“A lot of people don’t know how to use AI and the reason is that they don’t know how to ask questions to the AI tool,” he said. “So I advocate we should open a class about AI literacy to everyone—not just for the students, it could be anyone around the community.

“If they can use their AI tools to help their work, or their life, then this is the class for them,” Lui said.

Donna Smith • May 8, 2025 at 1:55 pm

Thank you Isaac for presenting such a well-researched news piece here. As you mentioned, AI use can often stand out in course work, shared through Canvas, but as it evolves, it becomes ever more difficult to determine whether it is ‘real’ student work, or synthetic. Interactions in classrooms, between students and instructors and the demand for a hands-on participation in coursework help, but it is still an individual thing to assess if student work is theirs or manufactured as theirs.